🪤 The Hidden Cost of Outdated API Specs (for Go Developers)

The Story: “It works in Postman… why does the client 500?” 🤔

It started like many integrations. A partner’s OpenAPI doc looked clean. I wired a Go client, shipped to staging, and—boom—mysterious 500s 💥. Spec said amount was an integer; server wanted a stringified decimal with currency. Error examples? Zero. We opened a ticket. They patched the spec a week later—after we had built retries, fallbacks, and monitoring around a ghost 👻.

That week wasn’t just annoying. It was real cost: lost time, brittle workarounds, extra on-call noise, and frayed trust. Outdated specs are a tax we keep paying 💸.

TL;DR: The Tool & The Vision 🎯

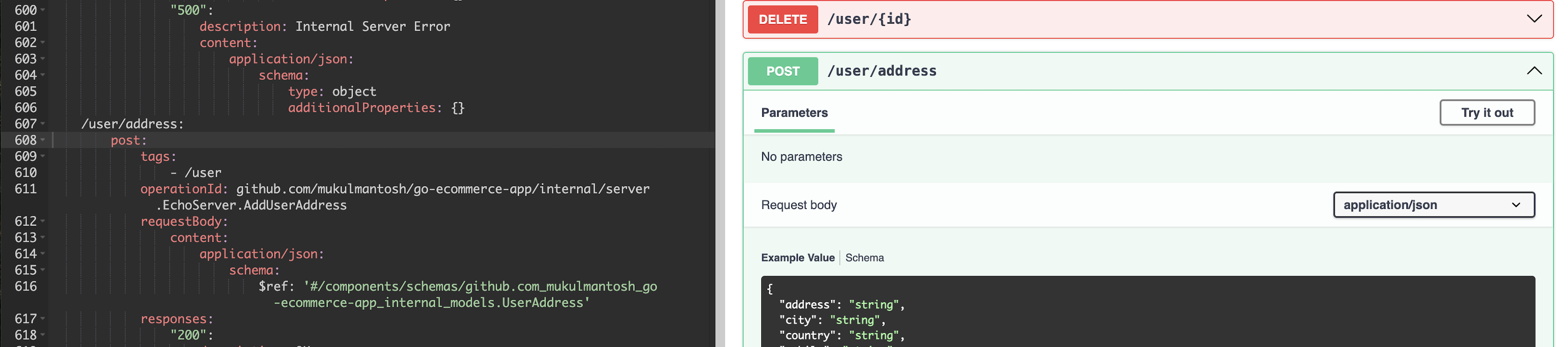

apispec is an early experiment in making your Go code the single source of truth for API documentation. Instead of maintaining separate specs or annotations, it analyzes your actual handlers, types, and middleware to generate OpenAPI 3.1 specifications automatically—where your running code is so expressive that documentation becomes impossible to get wrong. Currently supports Gin, Echo, Chi, Fiber, and net/http using AST analysis and call graphs.

What’s a Spec, Really? (OpenAPI in 30 seconds) ⚡

An API spec is your contract: endpoints, schemas, auth, errors, and expectations captured in a machine‑readable format. OpenAPI (formerly Swagger 😉) is the de‑facto standard for REST: one YAML/JSON file that can fuel docs, clients, servers, mocks, tests, and governance.

When the spec is right, teams move faster in parallel. When it drifts, every consumer pays 💸.

Spec‑First vs Code‑First (and where reality intervenes) ⚖️

Spec‑First (Design‑First) 📝

You manually write the OpenAPI spec first, then use tools to generate server code, handlers, and types from that specification.

Pros:

- Up‑front contract enables parallel work (backend, frontend, SDKs, QA)

- Enables early review: naming, consistency, breaking change detection

- Auto‑generate mocks, tests, and reference docs from day 0

- Great for platform teams, partner APIs, multi‑language SDKs

- Generated code enforces the contract by design

Cons:

- Front‑loaded design time; can feel slow for greenfield spikes

- Manual YAML/JSON editing can be tedious and error-prone

- Learning curve for OpenAPI spec writing and tooling

- Generated code structure may feel rigid for some developers

- Iteration cost: you may need multiple spec tweaks to get desired generated code; requires learning generator nuances and exploring options/templates

Benefits for CI/CD:

- No drift by design—implementation matches spec because it’s generated from it

- Spec linting catches issues before code generation

- Breaking changes are explicit spec changes, easy to review in PRs

Popular spec‑first tools for Go:

- Goa: Design-first framework with its own DSL, generates complete servers and clients.

- go-swagger: Generates servers/clients from OpenAPI 2.0 specs.

- oapi-codegen: Generates Go code from OpenAPI 3.x specifications.

- ogen: OpenAPI 3.0/3.1 code generator for Go (server and client) with strong type-safety and validation.

- OpenAPI Generator: Java-based tool that generates Go servers/clients from OpenAPI v2/v3 specs.

Design/documentation tools: Stoplight, Redocly, SwaggerHub, Postman’s API Builder.

Validation tools: Spectral (linting), openapi-diff (breaking changes), Dredd/Prism (contract tests/mocks).

Code‑First (Implementation‑First) 💻

You write your API handlers first, then generate the OpenAPI spec from the implemented code. There are two main approaches:

1. Framework Wrappers (e.g., Fuego) 🔥 Tools that provide their own API framework/wrappers that automatically infer specs from function signatures and types.

Pros:

- No annotations needed; spec generated automatically from code structure

- Type safety enforced by the framework

- Fast iteration; change code, spec updates automatically

Cons:

- Framework lock-in; must use their specific API patterns

- Limited to what can be inferred from Go types and signatures

- Learning curve for framework-specific patterns

2. Annotation/Comment-Based (e.g., swag) 📝 Tools that scan your existing code for special comments/annotations to generate specs.

Pros:

- Works with existing frameworks (Gin, Echo, Chi, Fiber)

- No framework lock-in; just add annotations to existing handlers

- Can retrofit onto legacy APIs

Cons:

- Annotation sprawl: you must write and maintain comments above handlers

- Easy to forget updating annotations when handlers change

- Reviewers often miss annotation changes in code diffs

- Learning curve for annotation syntax and OpenAPI mapping

Note: The staleness/drift risk primarily affects annotation/comment-based code-first. Framework wrappers that infer from code (e.g., Fuego) keep specs aligned with implementation by construction.

Popular code‑first tools for Go:

- Fuego: Modern Go framework that generates OpenAPI 3 specs from code signatures using generics.

- swaggo/swag: Generates OpenAPI docs from Go annotations/comments.

- Gin/Echo/Chi/Fiber with swag integration for annotation-based documentation.

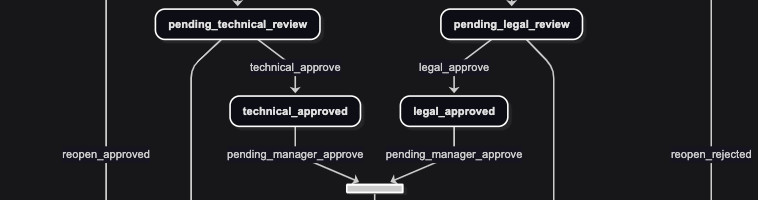

The Real Enemy: Drift 🎯

Docs drift is not a tooling problem alone—it’s a process problem. The cure is feedback loops that fail fast when contract and code diverge.

Must‑have guardrails:

- Spec linting: Catch naming inconsistencies, missing auth, pagination patterns, and error definitions

- Breaking change detection: Diff specs across PRs; block breaking changes without a version plan

- Contract tests: Run requests derived from the spec against your service or mocks

- Client/SDK generation: Generate clients in CI to catch schema breakage immediately

- Examples as test fixtures: Treat examples as executable tests, not decoration

We’ll introduce a code-centric approach next that aims to reduce drift by making the spec a byproduct of code.

Weaving It Into Go Workflows 🔧

net/http,Gin,Echo,Chi,Fiber: pick your poison, but standardize middleware for auth, errors, pagination, correlation IDs- Define canonical error shapes; document and test them

- Keep examples next to handlers or in fixtures—use them to power both docs and tests

Versioning & Deprecation: The Art of Breaking Gracefully 📈

API versioning isn’t just about adding /v2 to your URLs. It’s about managing change while maintaining trust with your consumers.

Versioning Strategies

URL Versioning (/api/v1/users, /api/v2/users):

- ✅ Clear separation, easy to understand

- ❌ URL pollution, harder to maintain multiple versions

Header Versioning (Accept: application/vnd.api+json;version=2):

- ✅ Clean URLs, flexible

- ❌ Less discoverable, requires client changes

Query Parameter (/api/users?version=2):

- ✅ Simple to implement

- ❌ Can be forgotten, cached incorrectly

Deprecation Policy Best Practices

🗓️ Clear Communication Timeline

v1.0 → v1.1 (6 months notice) → v2.0 (deprecation) → v2.1 (sunset)

Explanation: Give consumers at least 6 months notice before deprecating features. Announce deprecation in v1.1, implement breaking changes in v2.0, and completely remove deprecated features in v2.1.

Key principles from industry leaders:

- No surprises: Set clear timelines and stick to them

- Multiple channels: Use changelogs, emails, and API documentation

- Semantic versioning: Use MAJOR.MINOR.PATCH to signal change impact

🛤️ Gradual Migration Path

- Backward compatibility: Add new endpoints, don’t change existing ones; use default values for new optional parameters

- Migration guides: Provide clear documentation with code examples and step-by-step instructions

- Feature flags: Test changes with subset of users before full rollout

- Aliases: Keep old field names, add aliases for new ones to ease transitions

📊 Monitoring & Analytics

- Usage tracking: Monitor deprecated endpoint usage to identify active consumers

- Proactive outreach: Contact consumers still using deprecated features

- Alert systems: Detect unexpected usage spikes that may indicate integration issues

- Smaller, frequent releases: Give your API “small tune-ups” instead of major overhauls to reduce disruption

🤝 Consumer Support

- Documentation: Maintain clear deprecation timelines and migration alternatives

- SDK updates: Provide automated migration paths through updated client libraries

- Enterprise support: Offer dedicated assistance for high-value customers

- Sandbox environments: Let developers test new versions before they go live

- Rollback information: Explain how to switch back if needed

📚 Learn more: API Versioning Strategies: Best Practices Guide - includes real-world examples from Twitter, GitHub, Google Maps, and Facebook, plus lessons learned from versioning failures

The Real Source of Truth: A Paradigm We’re Still Building 🏗️

Here’s the uncomfortable truth: your running code is the only source of truth that matters 💯. Specs can lie. Comments can be wrong. But the code that handles production requests? That’s reality.

Yet we’ve built an entire ecosystem around maintaining parallel representations of that reality. We write specs, then code. Or code, then annotations. We lint specs, diff specs, test against specs. But what if the fundamental problem isn’t tooling—it’s that we’re treating symptoms instead of the disease? 🤔

The dream: Code that is so expressive, so self-documenting, that the OpenAPI spec becomes a natural byproduct, not a separate artifact to maintain. Where the type system, function signatures, and error handling patterns tell the complete story of your API contract.

Toward Code as the Single Source of Truth 🎯

This is where apispec comes in—not as another tool, but as a step toward a different paradigm. Instead of asking developers to maintain two representations of the same API, what if we could make the code itself the definitive contract?

The vision 🌟:

- Your Go handlers, types, and middleware patterns contain all the information needed for a complete OpenAPI spec

- No annotations, no comments, no parallel YAML files—just expressive, well-structured code

- The spec becomes a live reflection of what your API actually does, not what you intended it to do

What apispec does 🔧:

- Analyzes Go code and automatically generates OpenAPI 3.1 specs (YAML or JSON)

- Detects routes for popular frameworks (Gin, Echo, Chi, Fiber, net/http, Gorilla Mux)

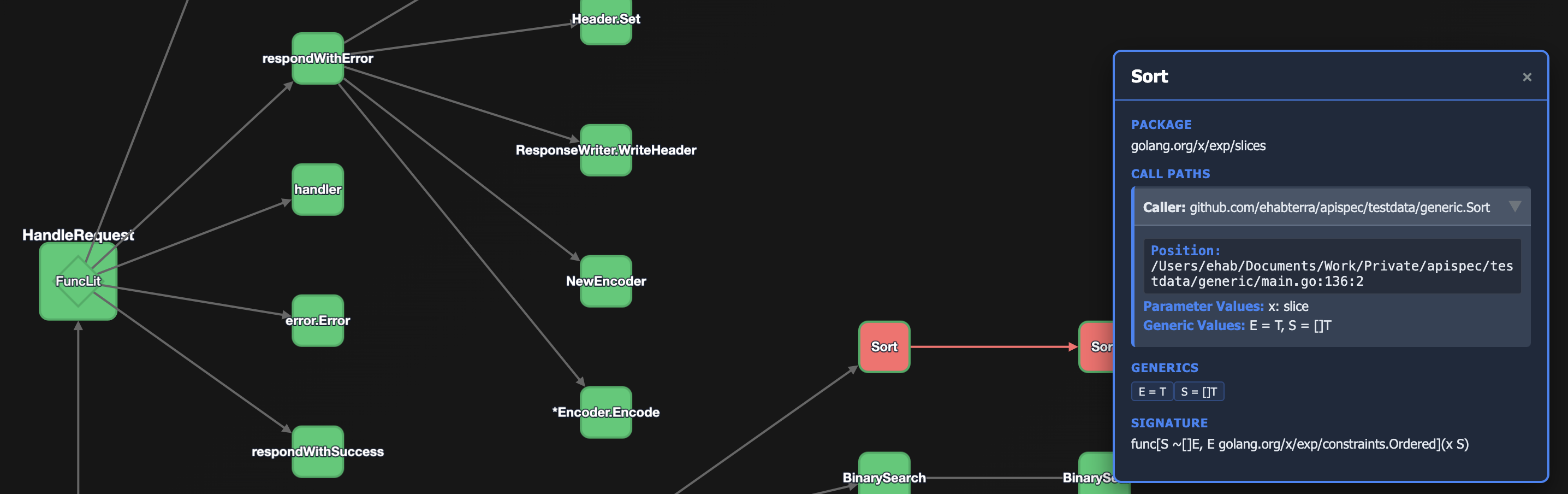

- Follows call graphs to final handlers and infers request/response types from real code

- Uses AST analysis, type checking, and pattern matching to understand API structure

- Provides configurable YAML patterns for different frameworks and custom type mappings

# Basic usage

apispec --output openapi.yaml # Generate spec

apispec --config custom.yaml --output spec.yaml # Custom patterns

apispec --diagram diagram.html --write-metadata # Debug with call graph

The tool performs comprehensive static analysis: it loads and type-checks all Go packages, detects the web framework automatically, builds a call graph to trace execution flow, and maps discovered patterns to OpenAPI 3.1 specifications. It handles complex Go features like generics, aliases, enums, and nested structs while providing safeguards against infinite recursion in large codebases.

Note: APISpec is under active development and not yet production‑ready.

The Challenge Ahead 🚧

This isn’t just a tooling problem—it’s a paradigm shift. It requires:

- Consistent patterns: Go APIs written in ways that tools can understand and extract contracts from

- Community alignment: Agreement on how to structure handlers, errors, middleware for maximum expressiveness

- Tooling maturity: Sophisticated analysis that can infer complex API behaviors from code structure

This is just the beginning 🌱. apispec is an early experiment in making code the authoritative source of truth. It’s imperfect, incomplete, and will need significant community effort to reach its potential.

But imagine a world where your API documentation is always accurate—because it’s impossible for it to be otherwise. Where breaking changes are caught not by comparing specs, but by analyzing the actual code changes. Where onboarding a new developer means reading the handlers, not hunting through YAML files.

That’s the paradigm worth building toward. And it starts with tools that treat your code as the source of truth it already is.

Quick Checklist You Can Adopt Today ✅

- Decide your primary truth: spec‑first for external/partner/public APIs; code‑first for internal spikes

- Why: External APIs need stable contracts for consumers; internal APIs can iterate faster with code-first

- Add spec linting and diff to CI: Use tools like Spectral to catch naming inconsistencies, missing auth, and breaking changes

- Why: Prevents spec drift and catches issues before they reach production

- Add contract tests: Run requests derived from your spec against your service or mocks

- Why: Ensures your implementation actually matches what the spec promises

- Bonus: Consider Consumer-Driven Contracts (CDC) with tools like

Pactfor consumer-focused validation

- Version intentionally: Document deprecations and breaking changes with clear migration paths

- Why: Gives consumers time to adapt and maintains trust in your API

- How: Use semantic versioning, provide 6+ month deprecation notices, maintain migration guides

- Automate client/SDK generation: Generate clients in CI to catch schema breakage immediately

- Why: Forces you to think about breaking changes before they happen

Why This Matters for Go Developers 🐹

Go’s type system, interfaces, and growing use of generics make it feasible to infer rich API contracts directly from code—especially with consistent patterns for request/response types and error envelopes. Leaning into code-as-contract means fewer parallel artifacts to maintain, fewer surprises for consumers, and better leverage of Go’s strengths.

Closing Thought 💭

Outdated specs are a silent tax 💸. Whether you design first or code first, build the rails that keep your contract and implementation from drifting apart. Tools help. Process makes it stick. If you write Go, give apispec a spin and make your spec a living truth again. 👉 Get started with apispec on GitHub

References

- Goa – Design‑First for Go

- go-swagger – Swagger/OpenAPI 2.0 toolkit

- oapi-codegen – OpenAPI 3.x to Go

- Fuego – Generate OpenAPI 3 from Go code

- swaggo/swag – OpenAPI from Go annotations

- APISpec – Generate OpenAPI 3.1 from Go code

- Spectral – OpenAPI Linter

- Pact – Consumer-Driven Contracts

- API Versioning Strategies: Best Practices Guide